Harvard Medical School (BIDMC) and Massachusetts Institute of Technology (CSAIL), USA

Authors: Dayong Wang, Aditya Khosla, Rishab Gargeya, Humayun Irshad, and Andrew Beck

Abstract: We tackled the problem of automatic cancer metastases detection by integrating deep learning and traditional machine learning algorithms. We first trained a deep convolutional neural network (CNN) on ~290K randomly selected tumor and normal patches extracted from hundreds of training whole slide images (WSIs). The architecture of our CNN was based on the 22-layer GoogLeNet developed by Szegedy et al. (CVPR 2014). We then applied the trained model to partially overlapping patches from each WSI to create tumor prediction heatmaps. After evaluating the accuracy of these heatmaps, we extracted additional training patches from the false positive regions and trained a final model on the combined training data set to create the tumor prediction heatmaps. For the slide-based tumor classification task, we extracted a set of geometric features from each tumor probability heatmap in the training set and trained a random forest classifier to estimate the probability that each slide contained metastatic cancer. We then applied these models (the CNN and the random forest classifier) to the test images to provide a slide-based estimate of the probability of cancer metastases. For the lesion-based tumor region segmentation task, we applied a threshold of 0.90 to the tumor probability heatmaps and predicted the tumor location as the center of each predicted tumor region.
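The lesion-based step above (threshold the heatmap at 0.90, then report the center of each predicted tumor region) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the heatmap is taken to be a 2-D grid of tumor probabilities, regions are grouped with 8-connectivity, "at or above 0.90" is used as the threshold test, and each region is given a confidence score equal to its maximum probability. The function name `detect_lesions`, the connectivity, and the scoring rule are all hypothetical choices the source does not specify. Coordinates are in heatmap cells; mapping them back to slide pixels would depend on the patch stride, which is also not given here.

```python
from collections import deque


def detect_lesions(heatmap, threshold=0.90):
    """Return (row, col, score) for each connected region at or above threshold.

    heatmap: 2-D list of tumor probabilities (one value per heatmap cell).
    Assumptions (not from the source): 8-connectivity, and score = the
    region's maximum probability.
    """
    rows, cols = len(heatmap), len(heatmap[0])
    seen = [[False] * cols for _ in range(rows)]
    lesions = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or heatmap[r][c] < threshold:
                continue
            # Breadth-first flood fill collects one connected tumor region.
            queue = deque([(r, c)])
            seen[r][c] = True
            cells = []
            while queue:
                y, x = queue.popleft()
                cells.append((y, x))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and not seen[ny][nx]
                                and heatmap[ny][nx] >= threshold):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            # Predicted lesion location: geometric center (centroid) of the region.
            cy = sum(y for y, _ in cells) / len(cells)
            cx = sum(x for _, x in cells) / len(cells)
            score = max(heatmap[y][x] for y, x in cells)
            lesions.append((cy, cx, score))
    return lesions


# Toy heatmap with two separate high-probability regions.
heatmap = [
    [0.10, 0.95, 0.92, 0.20],
    [0.05, 0.91, 0.30, 0.10],
    [0.00, 0.10, 0.20, 0.97],
]
lesions = detect_lesions(heatmap)
print(lesions)
```

Using the centroid of the binary region, rather than the location of the highest probability, follows the source's wording "center of each predicted tumor region"; either choice is cheap to compute once the regions are labeled.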

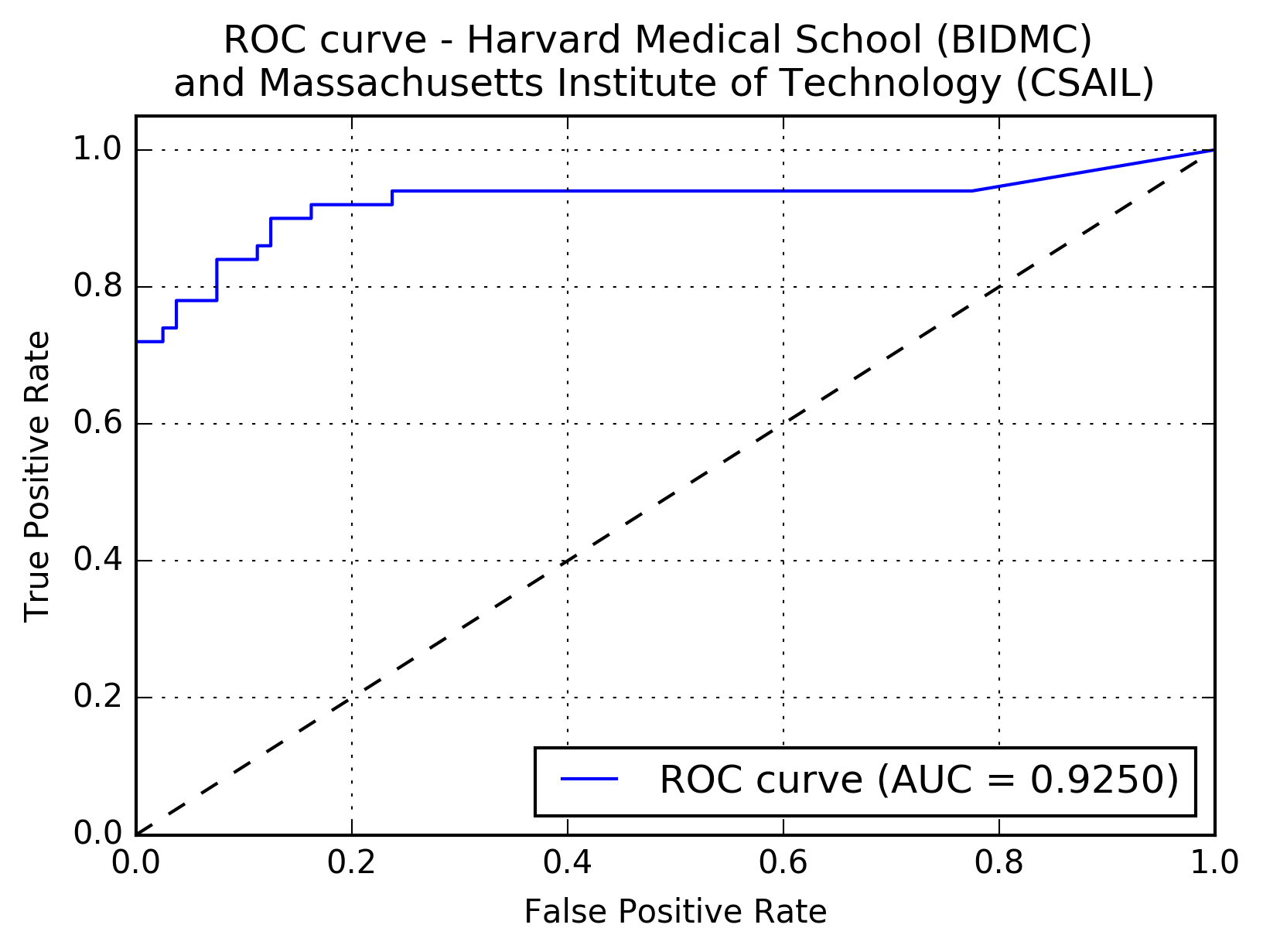

Results: The following figure shows the receiver operating characteristic (ROC) curve of the method.

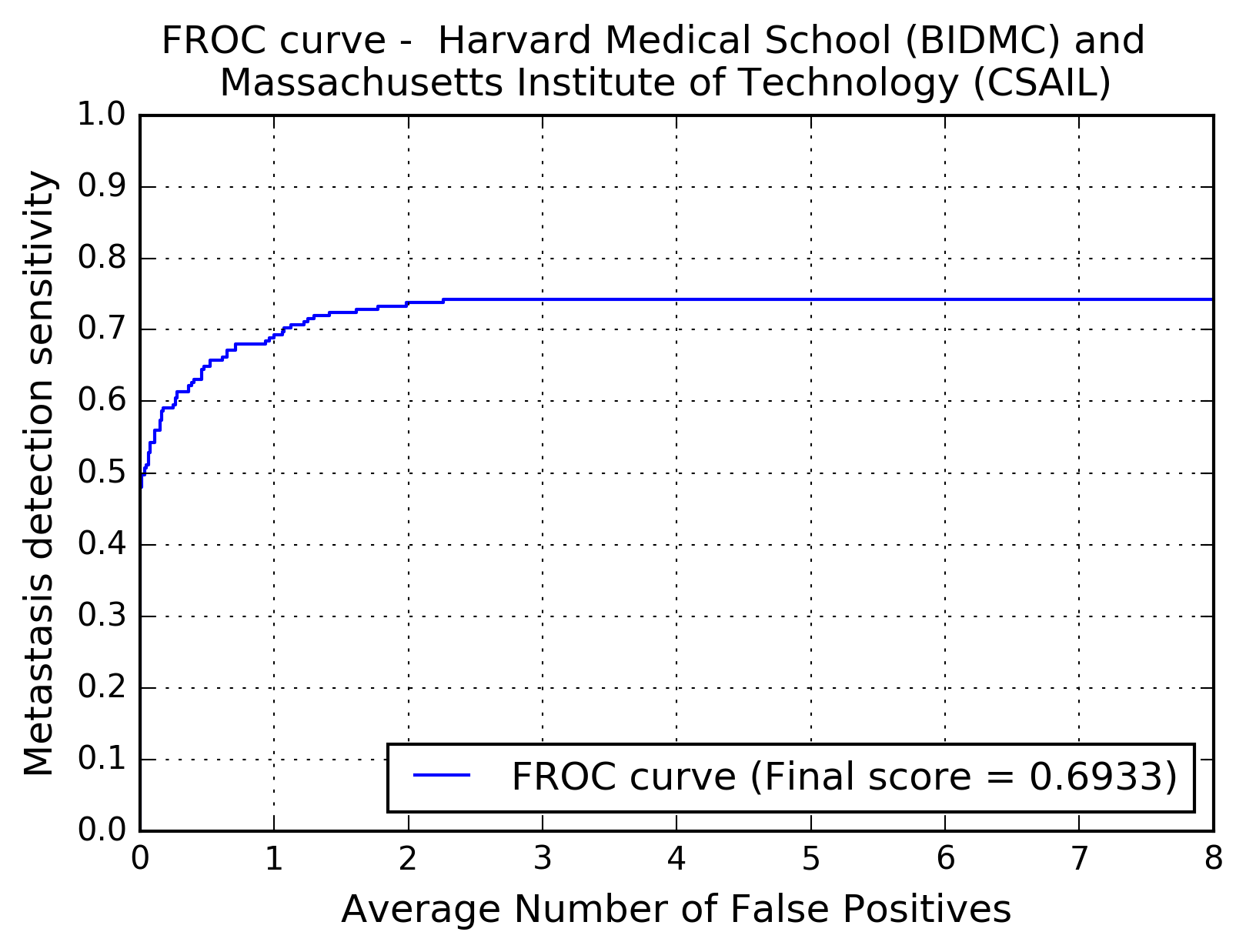

The following figure shows the free-response receiver operating characteristic (FROC) curve of the method.

The table below presents the average sensitivity of the developed system at 6 predefined false positive rates: 1/4, 1/2, 1, 2, 4, and 8 FPs per whole slide image.

| FPs/WSI | 1/4 | 1/2 | 1 | 2 | 4 | 8 |
|---|---|---|---|---|---|---|
| Sensitivity | 0.596 | 0.649 | 0.693 | 0.738 | 0.742 | 0.742 |
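These six operating points are the ones used by the Camelyon16 lesion-level evaluation, where (assuming that convention applies here) the overall FROC score is the mean of the sensitivities at the six FP rates. The short sketch below only does that arithmetic on the values in the table; the helper name `froc_score` is hypothetical.

```python
# Average sensitivities from the table, keyed by false positives per WSI.
sensitivity_at_fp = {
    0.25: 0.596,
    0.5: 0.649,
    1: 0.693,
    2: 0.738,
    4: 0.742,
    8: 0.742,
}


def froc_score(sens_by_fp):
    """Mean sensitivity over the predefined FP-per-WSI operating points."""
    return sum(sens_by_fp.values()) / len(sens_by_fp)


score = froc_score(sensitivity_at_fp)
print(f"FROC score: {score:.3f}")
```

The six values sum to 4.160, so the mean is about 0.693. Sensitivity plateaus at 0.742 from 4 FPs/WSI onward, so most of the remaining gain would have to come from the low-FP end of the curve.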